Portal Foundation

PortalOS

Your locally hosted AI command-line interface for enhanced privacy and productivity.

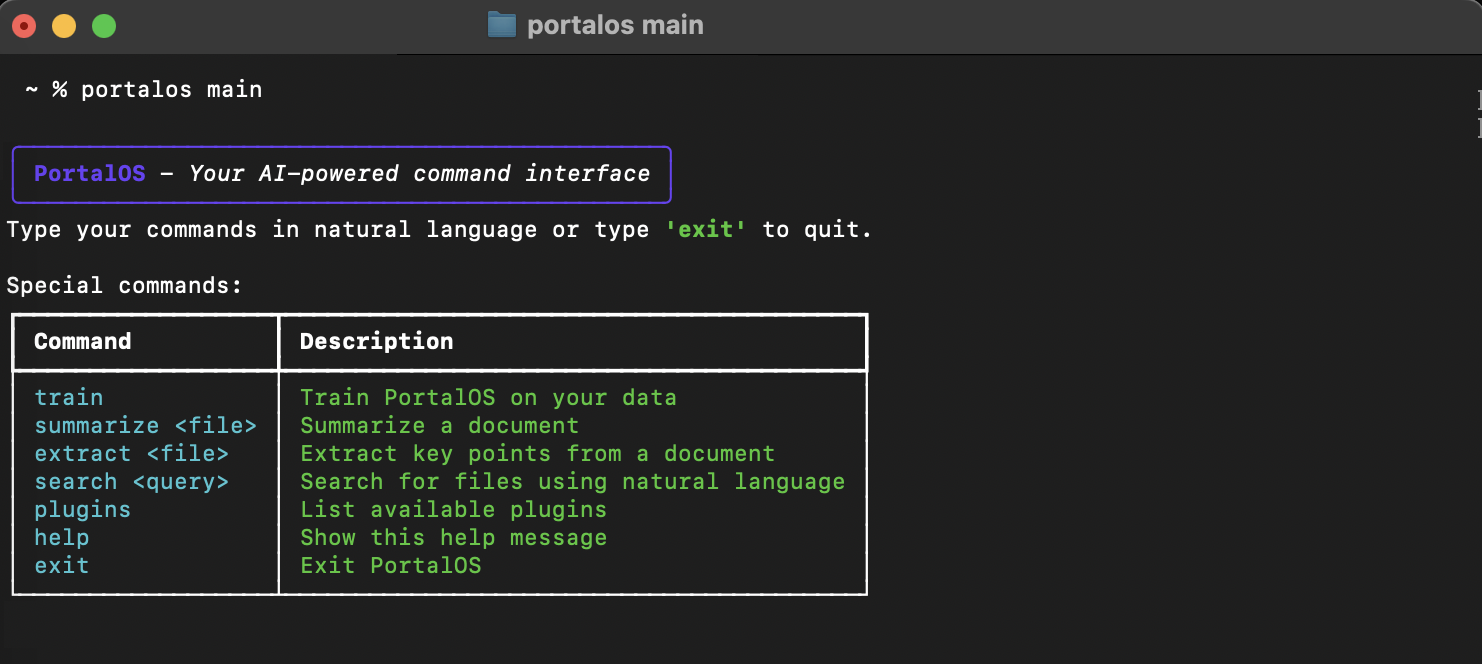

PortalOS: Your Local AI Command-Line Interface

Overview

PortalOS is a locally hosted AI command-line interface that lets users interact with their files and system through natural language. Because commands are processed on the user's own machine, it preserves privacy while providing file search, document summarization, and system control through an interface designed to be approachable for users without AI expertise.

Core Features

Natural Language Interface

- Intuitive command processing using everyday language

- Context-aware conversations and command history

- Intelligent query understanding and interpretation

- Custom command creation and automation
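As an illustration only, the step that turns an everyday-language request into a structured command can be sketched with pattern rules plus a bounded command history. In PortalOS this interpretation would presumably be done by the language model; the `parse_request` function, the intent names, and the regex rules below are hypothetical stand-ins.

```python
import re
from collections import deque
from typing import Deque, Dict, Optional

# Hypothetical intent rules (regex -> intent name). A real deployment would
# most likely let the language model perform this interpretation step.
INTENT_RULES = [
    (re.compile(r"\b(find|search|locate)\b.*?\b(?P<target>[\w.-]+\.\w+)\b", re.I), "search_files"),
    (re.compile(r"\bsummari[sz]e\b\s+(?P<target>\S+)", re.I), "summarize"),
]

# Bounded history so recent commands can supply context for follow-ups.
history: Deque[Dict[str, str]] = deque(maxlen=20)


def parse_request(text: str) -> Optional[Dict[str, str]]:
    """Map a natural-language request to {intent, target}, or None."""
    for pattern, intent in INTENT_RULES:
        match = pattern.search(text)
        if match:
            command = {"intent": intent, "target": match.group("target")}
            history.append(command)  # remember the command for context
            return command
    return None  # no rule matched; a model-based parser would take over here
```

For example, `parse_request("Please find report.pdf on my disk")` yields `{"intent": "search_files", "target": "report.pdf"}`. Rules like these are brittle, which is why an LLM-based parser is the more likely production choice.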

Local Processing & Privacy

- All AI processing runs on your machine, preserving privacy

- No data sent to external servers or cloud services

- Secure handling of sensitive documents and information

- Complete control over your data and how it's used
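One way to enforce "no data sent to external servers" is to check every model endpoint before sending a request. The sketch below is a hypothetical helper, not taken from PortalOS: it accepts only `localhost` and loopback IP addresses and rejects any other hostname, since a name could resolve to a remote machine.

```python
import ipaddress
from urllib.parse import urlparse


def is_local_endpoint(url: str) -> bool:
    """Hypothetical guard: True only if the URL targets this machine."""
    host = urlparse(url).hostname  # lowercased, IPv6 brackets stripped
    if host is None:
        return False
    if host == "localhost":
        return True
    try:
        return ipaddress.ip_address(host).is_loopback  # 127.0.0.0/8 or ::1
    except ValueError:
        # Any other hostname might resolve to a remote server: refuse it.
        return False
```

With this check, `http://localhost:11434/api/generate` (Ollama's default port) passes, while `https://api.example.com/v1` or a LAN address such as `http://10.0.0.5:11434` is refused.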

Flexible Model Options

- Ollama integration for powerful open-source models

- llama.cpp support for lightweight, optimized performance

- Custom model implementation for specialized use cases

- Automatic model selection based on hardware capabilities
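Automatic model selection could be as simple as comparing installed memory with a threshold. This sketch borrows the 8 GB figure from the Ollama backend notes further down; the actual selection rule PortalOS uses is not specified, so `select_backend`, `detect_total_ram_gb`, and the threshold are assumptions.

```python
import os
from typing import Optional

# Assumed threshold, taken from the "8GB+ RAM" guidance for Ollama.
OLLAMA_MIN_RAM_GB = 8.0


def detect_total_ram_gb() -> Optional[float]:
    """Best-effort physical RAM detection on POSIX systems; None elsewhere."""
    try:
        pages = os.sysconf("SC_PHYS_PAGES")
        page_size = os.sysconf("SC_PAGE_SIZE")
    except (AttributeError, ValueError, OSError):
        return None  # e.g. Windows, where os.sysconf does not exist
    if pages <= 0 or page_size <= 0:
        return None
    return pages * page_size / 1024**3


def select_backend(total_ram_gb: Optional[float]) -> str:
    """Hypothetical rule: Ollama on 8 GB+ machines, llama.cpp otherwise."""
    if total_ram_gb is not None and total_ram_gb >= OLLAMA_MIN_RAM_GB:
        return "ollama"
    # Unknown or limited memory: fall back to the lighter llama.cpp backend.
    return "llama.cpp"
```

Typical use is `select_backend(detect_total_ram_gb())`. Falling back to llama.cpp when memory cannot be measured is the conservative choice.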

Advanced Document Processing

- Multi-format document support (PDF, Markdown, text, etc.)

- Intelligent document summarization and key point extraction

- OCR capabilities for scanned documents using Tesseract

- Document comparison and analysis tools
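For plain-text formats, document comparison can rely on Python's standard `difflib`. PDFs and scanned documents would first need text extraction (for scans, the Tesseract OCR step listed above), which this sketch does not cover. `compare_documents` is a hypothetical name.

```python
import difflib
from typing import List


def compare_documents(old_text: str, new_text: str,
                      old_name: str = "old", new_name: str = "new") -> List[str]:
    """Return a line-by-line unified diff of two plain-text documents."""
    return list(difflib.unified_diff(
        old_text.splitlines(),
        new_text.splitlines(),
        fromfile=old_name,
        tofile=new_name,
        lineterm="",  # lines from splitlines() carry no newline characters
    ))
```

Identical inputs produce an empty list. Otherwise the result starts with `--- old` / `+++ new` headers, followed by `-` and `+` lines for each changed passage, which a model could then summarize in prose.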

File Operations & Search

- Natural language file search and discovery

- Semantic search across document content

- Smart file organization suggestions

- Batch file operations with simple commands
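A natural-language search request still has to become a concrete lookup at some point. The sketch below shows only that lookup step: matching a keyword against file names and text contents. Semantic search would swap the substring test for embedding similarity. `search_files` and its default extensions are assumptions.

```python
from pathlib import Path
from typing import Iterable, List


def search_files(root: str, keyword: str,
                 extensions: Iterable[str] = (".txt", ".md")) -> List[Path]:
    """Hypothetical helper: files under root whose name or text contains keyword."""
    needle = keyword.lower()
    exts = {e.lower() for e in extensions}
    matches = []
    for path in sorted(Path(root).rglob("*")):
        if not path.is_file() or path.suffix.lower() not in exts:
            continue
        if needle in path.name.lower():
            matches.append(path)  # a name match is enough; skip reading
            continue
        try:
            text = path.read_text(encoding="utf-8", errors="ignore")
        except OSError:
            continue  # unreadable file: skip it rather than abort the search
        if needle in text.lower():
            matches.append(path)
    return matches
```

A request such as "find my notes about the budget" might be reduced to `search_files("~/Documents", "budget")` after expanding the path. That reduction is the model's job and is not shown here.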

System Control & Automation

- Execute system commands through natural language

- Schedule and automate recurring tasks

- Monitor system performance and health

- Create custom workflows and automation scripts
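Running system commands derived from model output is risky. A conservative pattern, assumed here rather than documented for PortalOS, combines an allowlist of executables with `subprocess.run` without a shell, so generated text cannot smuggle in pipes or `;`. `run_allowed` is a hypothetical name.

```python
import subprocess
from pathlib import Path
from typing import Iterable, List


def run_allowed(argv: List[str], allowed: Iterable[str], timeout: float = 30.0) -> str:
    """Hypothetical helper: run argv only if its executable is allowlisted."""
    if not argv:
        raise ValueError("empty command")
    name = Path(argv[0]).name
    if name not in set(allowed):
        raise PermissionError(f"command not allowed: {name}")
    # No shell is involved (shell=False is the default): argv goes straight
    # to the OS, so shell metacharacters in arguments are not interpreted.
    result = subprocess.run(argv, capture_output=True, text=True,
                            timeout=timeout, check=True)
    return result.stdout
```

For example, `run_allowed(["ls", "-l"], allowed={"ls", "df"})` runs `ls`, while a request that maps to `curl` raises `PermissionError` before anything executes.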

Technology Infrastructure

AI Engine Architecture

- Language model integration framework

- Command parsing and context management

- Modular processing pipeline

- Extensible plugin system

- Efficient memory and resource management
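A modular pipeline with an extensible plugin system often comes down to a registry that maps parsed intents to handlers. The decorator-based registry below is a generic Python pattern, not PortalOS's documented plugin API. `plugin`, `dispatch`, and the two sample plugins are hypothetical.

```python
from typing import Callable, Dict

Handler = Callable[[str], str]

# Hypothetical registry: intent name -> handler function.
PLUGINS: Dict[str, Handler] = {}


def plugin(intent: str) -> Callable[[Handler], Handler]:
    """Decorator that registers a handler for one intent."""
    def register(func: Handler) -> Handler:
        if intent in PLUGINS:
            raise ValueError(f"duplicate plugin for intent: {intent}")
        PLUGINS[intent] = func
        return func
    return register


@plugin("word_count")
def word_count(text: str) -> str:
    return str(len(text.split()))


@plugin("upper")
def upper(text: str) -> str:
    return text.upper()


def dispatch(intent: str, payload: str) -> str:
    """Route a parsed command to its plugin; unknown intents fail loudly."""
    handler = PLUGINS.get(intent)
    if handler is None:
        raise KeyError(f"no plugin registered for intent: {intent}")
    return handler(payload)
```

With this design, third-party plugins only need to import `plugin` and decorate a function. The core pipeline never has to change.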

Knowledge Management

- Document processing and extraction

- Personal data training capabilities

- Retrieval-augmented generation (RAG)

- Information relevance scoring

- Contextual knowledge organization
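Retrieval-augmented generation with relevance scoring can be sketched in a few steps. Each document chunk is scored against the query, the top matches are kept, and they are placed in the prompt ahead of the question. To stay dependency-free, this sketch swaps in bag-of-words cosine similarity where a real system would likely use an embedding model. All function names are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def _vector(text: str) -> Counter:
    """Lowercased word counts: a crude stand-in for a learned embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: List[str], k: int = 2) -> List[Tuple[float, str]]:
    """Relevance-score every chunk against the query and keep the top k."""
    q = _vector(query)
    scored = [(cosine(q, _vector(c)), c) for c in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


def build_prompt(query: str, chunks: List[str], k: int = 2) -> str:
    """Retrieval-augmented prompt: top-k context followed by the question."""
    context = "\n".join(f"- {text}" for _, text in retrieve(query, chunks, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"
```

The prompt returned by `build_prompt` would then go to whichever local backend is active, so the model answers from the user's own documents.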

System Integration

- Low-level OS interaction capabilities

- Process management and monitoring

- Resource allocation optimization

- Cross-platform compatibility layer

- Background service management
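System monitoring can start from metrics in the Python standard library. A fuller implementation would probably use a third-party library such as `psutil` for per-process detail, but that is an assumption. `health_snapshot` is hypothetical, and the load average is only available on POSIX systems.

```python
import os
import shutil
from typing import Dict, Optional


def health_snapshot(path: str = ".") -> Dict[str, Optional[float]]:
    """Hypothetical helper: a few system health metrics from the stdlib."""
    usage = shutil.disk_usage(path)  # works on Windows, macOS, and Linux
    try:
        load_1min: Optional[float] = os.getloadavg()[0]  # POSIX only
    except (AttributeError, OSError):
        load_1min = None  # not available on this platform
    return {
        "cpu_count": float(os.cpu_count() or 0),
        "load_1min": load_1min,
        "disk_free_gb": usage.free / 1024**3,
        "disk_used_pct": 100.0 * usage.used / usage.total if usage.total else 0.0,
    }
```

A background service could take such a snapshot periodically and let the assistant report on it when the user asks something like "how much disk space is left?".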

Model Options

Ollama Backend

- Support for models like Phi, Llama, Mistral, and more

- Easy installation and management

- Model serving through a local REST API

- Best suited to modern hardware with 8 GB or more of RAM

- Simple model switching and updating
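Ollama serves models over a local REST API, on `http://localhost:11434` by default. The sketch below calls its `/api/generate` endpoint with `"stream": false`, which returns a single JSON object whose `response` field holds the generated text. It uses only the standard library. The helper names are hypothetical, and how PortalOS wraps this call is not documented.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local port


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        OLLAMA_URL, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to a running Ollama server and return the text."""
    with urllib.request.urlopen(build_generate_request(model, prompt), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))["response"]
```

Usage assumes the Ollama server is running and the model has been pulled (e.g. `ollama pull mistral`). After that, `generate("mistral", "Summarize: ...")` returns the completion. If the server is down, `urlopen` raises `urllib.error.URLError`.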

llama.cpp Backend

- Highly optimized for older and resource-constrained hardware

- Low memory footprint and efficient processing

- Support for quantized models (GGUF format), which reduce memory use and speed up inference

- Command-line model management

- Advanced inference optimization
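Quantization is what gives llama.cpp its small memory footprint. As a rule of thumb, weight memory ≈ parameters × bits per weight ÷ 8 bytes. This general estimate is not PortalOS-specific. It ignores the KV cache and runtime overhead, and real quantization formats use slightly more than their nominal bit width.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights alone, in GB (1e9 bytes).

    Ignores the KV cache, activations, and runtime overhead, and treats the
    nominal bit width as exact (real formats add a little per block).
    """
    bytes_total = params_billion * 1e9 * bits_per_weight / 8
    return bytes_total / 1e9


for bits in (16, 8, 4):
    print(f"7B model at {bits}-bit: ~{weight_memory_gb(7, bits):.1f} GB")
# 7B model at 16-bit: ~14.0 GB
# 7B model at 8-bit: ~7.0 GB
# 7B model at 4-bit: ~3.5 GB
```

By this estimate, 4-bit quantization cuts a 7B model's weights to roughly a quarter of their 16-bit size. That reduction is what lets such models run on machines with limited RAM.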

Custom Model Backend

- Integration with specialized local models

- Support for domain-specific fine-tuned models

- Custom model serving and configuration

- Advanced parameter tuning options

- Specialized model architecture support
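Custom backends can plug in cleanly if every backend satisfies one small interface. The sketch below uses a `typing.Protocol` (available from Python 3.8) and a validated parameter object. `GenerationParams`, `ModelBackend`, `EchoBackend`, and the 0.0 to 2.0 temperature range are all hypothetical choices, not PortalOS's documented API.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class GenerationParams:
    """Hypothetical tunable parameters shared by all backends."""
    temperature: float = 0.7
    max_tokens: int = 256

    def __post_init__(self) -> None:
        # Assumed valid range; real backends document their own limits.
        if not 0.0 <= self.temperature <= 2.0:
            raise ValueError("temperature must be between 0.0 and 2.0")
        if self.max_tokens <= 0:
            raise ValueError("max_tokens must be positive")


class ModelBackend(Protocol):
    """Any class with a matching generate() method counts as a backend."""
    def generate(self, prompt: str, params: GenerationParams) -> str: ...


class EchoBackend:
    """Toy backend for testing; treats max_tokens as a character limit."""
    def generate(self, prompt: str, params: GenerationParams) -> str:
        return prompt[: params.max_tokens]


def run(backend: ModelBackend, prompt: str,
        params: GenerationParams = GenerationParams()) -> str:
    return backend.generate(prompt, params)
```

Because the interface is structural, a fine-tuned domain model only needs a class with the same `generate` signature. It does not have to inherit from anything.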

Use Cases

Personal Productivity

- Document organization and management

- File search and discovery

- Meeting notes and summaries

- Personal knowledge management

- Task automation and scheduling

Knowledge Work

- Research assistance and summarization

- Content extraction from various sources

- Document analysis and comparison

- Technical documentation search

- Information synthesis and organization

Development & System Administration

- Code documentation and explanation

- System management and monitoring

- Configuration and troubleshooting

- Log analysis and debugging

- Development environment setup

Privacy-Conscious Users

- Sensitive document processing

- Local data analysis without exposure

- Controlled AI usage without data sharing

- Personal information management

- Private research and analysis

Future Development

Phase 1: Foundation

- Core command-line interface

- Basic document processing

- Ollama integration

- Essential file operations

- System command execution

Phase 2: Enhancement

- Advanced document processing

- Multiple model backend support

- Improved context management

- Plugin system implementation

- Task automation framework

Phase 3: Innovation

- Personal data training capabilities

- Advanced semantic search

- Multi-modal support (image, audio)

- Advanced automation workflows

- Cross-device synchronization

Installation & Setup

Prerequisites

- Choice of model backend (Ollama, llama.cpp, or custom)

- Python 3.8+ environment

- System requirements vary with the chosen model and backend

- Standard development tools for your platform
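A quick preflight check can confirm the Python version and detect which backends are installed. The executable names are assumptions: `ollama` is Ollama's CLI, and recent llama.cpp builds ship `llama-cli` and `llama-server`. Custom backends are not checked. `preflight` is a hypothetical helper.

```python
import shutil
import sys
from typing import Dict, List

# Assumed executable names for each backend (see lead-in).
BACKEND_EXECUTABLES: Dict[str, List[str]] = {
    "ollama": ["ollama"],
    "llama.cpp": ["llama-cli", "llama-server"],
}


def preflight() -> Dict[str, bool]:
    """Report whether Python is new enough and which backends are on PATH."""
    report = {"python>=3.8": sys.version_info >= (3, 8)}
    for backend, names in BACKEND_EXECUTABLES.items():
        report[backend] = any(shutil.which(n) is not None for n in names)
    return report


if __name__ == "__main__":
    for check, ok in preflight().items():
        print(f"{check}: {'ok' if ok else 'missing'}")
```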

Configuration

- Customizable model selection and parameters

- Directory and file access permissions

- Plugin and extension management

- Performance and resource allocation settings

- Personal data training options
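The settings above could live in a single configuration file. This sketch uses JSON so it runs on Python 3.8+ with the standard library alone, and it shows how directory permissions might be enforced. Every field name, the default model `llama3`, and the `PortalConfig` class are hypothetical.

```python
import json
from dataclasses import dataclass, field
from pathlib import Path
from typing import List


@dataclass
class PortalConfig:
    """Hypothetical settings object; field names are illustrative only."""
    backend: str = "ollama"
    model: str = "llama3"
    allowed_dirs: List[str] = field(default_factory=list)
    max_threads: int = 4

    @classmethod
    def from_json(cls, text: str) -> "PortalConfig":
        return cls(**json.loads(text))  # unknown keys raise TypeError

    def can_access(self, path: str) -> bool:
        """True only if path resolves inside one of the allowed directories."""
        target = Path(path).resolve()  # collapses "..", follows symlinks
        for allowed in self.allowed_dirs:
            try:
                target.relative_to(Path(allowed).resolve())
                return True
            except ValueError:
                continue
        return False
```

Resolving paths before comparing them stops tricks like `allowed/../secret.txt` from escaping the permitted directories.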

Community & Support

Documentation

- Comprehensive user guides

- Model selection recommendations

- Command reference and examples

- Plugin development tutorials

- Troubleshooting resources

Contribution Opportunities

- Open-source development

- Plugin creation and sharing

- Documentation improvements

- Model optimization techniques

- Use case sharing and templates

PortalOS brings AI to your local environment, enabling document processing, system control, and productivity enhancements while keeping your data private and under your control. Because it runs on your own machine and supports several model backends, from Ollama on machines with 8 GB or more of RAM to llama.cpp on older, resource-constrained hardware, PortalOS makes advanced AI capabilities accessible across a wide range of hardware.